The business challenge facing a tech team migrating to the cloud or a new environment

A team at a trading firm has been asked to move its low-latency systems to the cloud, which will probably entail moving to a newer OS version, new environment settings, and so on. The challenge is that it will take months to get the first setup running, which means migrating a few apps and checking that the whole thing works. The key question is whether the new system is set up to provide the desired performance. How would it fare against the existing physical, on-premises setup? What if we used a hybrid cloud versus a private cloud versus a public cloud?

What if the team realizes after months of work that the new environment will not work for a particular low-latency system? What if the team finds out during testing that some system-level settings were not applied, leading to performance degradation? How much delay would that cause for the project?

Is there a way to mitigate this risk and compare the various environments upfront?

What is the suggested solution?

This blog briefly introduces the NAS Parallel Benchmarks (NPB), which have become a standard for comparing environments. It also shows how to set up NPB, what tools and setup are needed to fine-tune the system, and what basic testing can be done before the team invests a large effort. The blog focuses on the very specific use case of CPU scheduler comparison and tuning, but a similar exercise can be extended to other benchmarks.

What are the NAS Parallel Benchmarks, and why can owners of low-latency systems leverage them?

“The NAS Parallel Benchmarks (NPB) are a small set of programs designed to help evaluate the performance of parallel supercomputers.” More about this at https://www.nas.nasa.gov/publications/npb.html.

Though the word "supercomputers" seems intimidating, the test applications come in various versions (problem-size classes) that can be built to suit anything from a small workstation to very large distributed systems and supercomputers. The scientists at NASA would have faced the same dilemma: how to benchmark systems before migrating the actual workloads. Sounds similar to the problem facing our tech team planning its move to the cloud, doesn't it?

How to set up and where to start with the benchmarks

So, I ran a small test on my Azure VM running RHEL 7 to get a sample of how this could be used in a real corporate environment.

Left: results of NPB MPI Class C on my VM. Right: Linux perf CPU sched parameters.

The bigger picture on how to use NPB

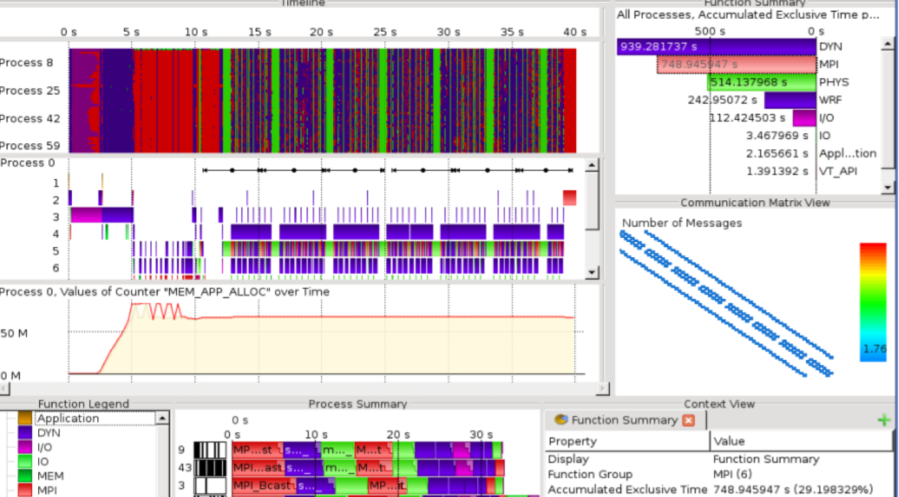

The picture above shows the results of NPB Class C run on my Azure VM running RHEL 7. The left side shows the summary printed by the NPB-MZ-MPI Class C tests: the time taken and the throughput in millions of operations per second (Mop/s). The same tests can be run in other environments (hybrid cloud, private cloud, physical servers) to see how they fare against each other.
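To make the comparison concrete, here is a minimal sketch of how the summary printed by each run could be parsed and compared. It assumes the usual `key = value` summary lines that NPB prints at the end of a run (`Time in seconds`, `Mop/s total`, `Verification`). The environment names and all numbers are made up for illustration and are not measurements from my VM.

```python
import re

# Illustrative summary blocks in the style NPB prints at the end of a run.
# The numbers are invented for this sketch; they are NOT real measurements.
SAMPLE_OUTPUTS = {
    "physical": """
 BT-MZ Benchmark Completed.
 Class           =                        C
 Time in seconds =                   100.00
 Mop/s total     =                 24000.00
 Verification    =               SUCCESSFUL
""",
    "cloud_vm": """
 BT-MZ Benchmark Completed.
 Class           =                        C
 Time in seconds =                   125.00
 Mop/s total     =                 19200.00
 Verification    =               SUCCESSFUL
""",
}


def parse_npb_summary(text):
    """Turn the 'key = value' lines of an NPB summary into a small dict."""
    fields = {}
    for line in text.splitlines():
        match = re.match(r"\s*(.+?)\s*=\s*(.+?)\s*$", line)
        if match:
            fields[match.group(1)] = match.group(2)
    return {
        "time_s": float(fields["Time in seconds"]),
        "mops_total": float(fields["Mop/s total"]),
        "verified": fields["Verification"] == "SUCCESSFUL",
    }


def slowdown_percent(baseline, candidate):
    """Return how much slower the candidate ran than the baseline, in percent."""
    return (candidate["time_s"] / baseline["time_s"] - 1.0) * 100.0


results = {env: parse_npb_summary(out) for env, out in SAMPLE_OUTPUTS.items()}
slowdown = slowdown_percent(results["physical"], results["cloud_vm"])
print(f"cloud_vm is {slowdown:.1f}% slower than physical")
```

In practice you would replace the sample strings with the captured output of each environment's run, and only compare runs whose verification succeeded.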

The picture on the right shows Linux perf data for CPU scheduling parameters. If a performance anomaly is found, the visualizations from the different environments should be compared so that the cloud environment can be tuned to have parameters similar to those of the physical hosts.
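Alongside the visual comparison, a quick way to catch system-level settings that were not applied is to diff the tunables of the two hosts. The sketch below is only an illustration: the snapshots are hypothetical (for example, collected with `sysctl -a` on each host), the values are invented, and the three scheduler tunables are simply examples of settings one might check on a RHEL 7 kernel.

```python
def diff_settings(baseline, candidate):
    """Return {name: (baseline_value, candidate_value)} for every mismatch."""
    names = sorted(set(baseline) | set(candidate))
    return {
        name: (baseline.get(name), candidate.get(name))
        for name in names
        if baseline.get(name) != candidate.get(name)
    }


# Hypothetical snapshots of scheduler tunables; the values are invented.
physical = {
    "kernel.sched_min_granularity_ns": 10000000,
    "kernel.sched_wakeup_granularity_ns": 15000000,
    "kernel.sched_migration_cost_ns": 5000000,
}
cloud_vm = {
    "kernel.sched_min_granularity_ns": 10000000,
    "kernel.sched_wakeup_granularity_ns": 4000000,
    # sched_migration_cost_ns is missing from this snapshot on purpose
}

mismatches = diff_settings(physical, cloud_vm)
for name, (expected, actual) in mismatches.items():
    print(f"{name}: physical={expected} cloud_vm={actual}")
```

A setting that is missing from one snapshot shows up with `None` on that side, which makes forgotten tunings easy to spot.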

What you need to run the setup

a. The Linux perf utility, compiled with the options needed to support CTF (Common Trace Format) conversion.

b. The NPB benchmark test suite.

c. Trace Compass, and that's it.

Please leave questions and comments if you have a similar use case in your real-world environment.
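The three pieces above fit together roughly as follows. This is a sketch of one possible workflow, not the exact commands I ran: the binary name `bin/bt-mz.C.x`, the rank count, and the output directory are assumptions to adapt to your own NPB build and MPI launcher. The `perf data convert --to-ctf` step is the one that needs CTF support, which typically means a perf build with libbabeltrace enabled.

```python
import shlex

# Hypothetical names: adjust to your own NPB build and MPI launcher.
NPB_BINARY = "bin/bt-mz.C.x"  # assumed binary name for a Class C BT-MZ build
MPI_RANKS = 4                 # assumed number of MPI processes


def build_workflow(npb_binary, ranks, ctf_dir="ctf_trace"):
    """Return the command lines to trace a run, summarize it, and export CTF."""
    benchmark = ["mpirun", "-np", str(ranks), npb_binary]
    return [
        # 1. Record scheduler events while the benchmark runs (writes perf.data).
        ["perf", "sched", "record", "--"] + benchmark,
        # 2. Print per-task scheduling latency from the recording.
        ["perf", "sched", "latency"],
        # 3. Convert perf.data to CTF so that Trace Compass can open it.
        ["perf", "data", "convert", f"--to-ctf={ctf_dir}"],
    ]


commands = build_workflow(NPB_BINARY, MPI_RANKS)
for cmd in commands:
    print(shlex.join(cmd))
```

The script only prints the commands so they can be reviewed first; run them in order on each environment, then open the resulting CTF directory in Trace Compass.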

References & further reading:

https://www.nas.nasa.gov/publications/npb.html

NASA's tests with AWS: https://www.nas.nasa.gov/SC18/demos/demo5.html